I’ve learned a good bit this week about tools and techniques for VR animation. The first technical hurdle I solved was the one I mentioned in my previous post: the rendered sequences combine a large image size with a high frame rate. A simple (and free!) solution was to use Blender to comp the image sequences and render out MP4s. Not being a regular Blender user, I was surprised to see how capable and flexible it is for this, and it’s really simplified things for me. There’s no more need to render uncompressed AVIs from AE and then compress them in HandBrake; Blender handles exporting to MP4 beautifully.

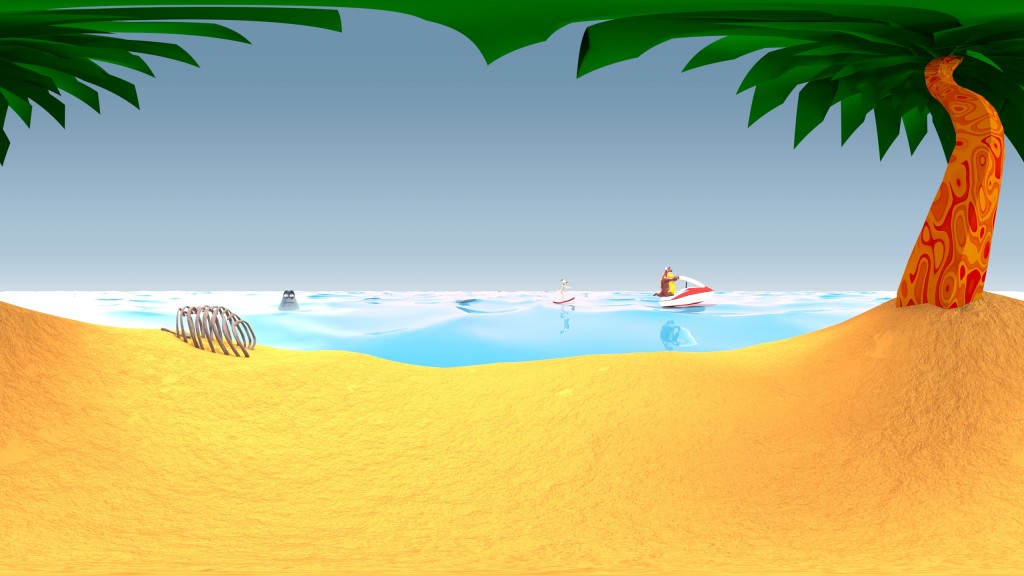

The other thing I’ve been exploring this week is stereoscopic VR. A mono 360 video is nice, but once you add depth with a stereo version it’s a whole lot more visually interesting and immersive. That raises one issue, though: finding a time-effective way of rendering in stereo. At the beginning of the week I started testing the demo version of Redshift for Maya, which has mono and stereo spherical cameras, and I was blown away by how fast it is on my machine. It uses the graphics card (I’m currently using a GTX 1080) rather than the CPU to do the heavy lifting in render calculations. After a few days of testing with watermarked images I took the plunge and bought the plugin. Last night I rendered a 400-frame animation test at 2880×2880, and it finished in just over 8 hours with very acceptable levels of noise. The attached image shows one side of the stereo image, rendered in spherical format, which is compatible with Facebook 360 photos.

The great thing with Redshift is that you can turn the samples right down and get a quick and dirty preview to check animation before committing to the overnight render. I got frames down to about 3 seconds each for these tests, which is even faster than what I was getting with Playblast VR. I do a lot of checking of these in the Gear VR to see how scale, distance and other factors are working in the animations.
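To put those numbers side by side, here’s a quick back-of-the-envelope sketch in Python. It only uses the figures quoted in this post (400 frames, an overnight render of roughly 8 hours, previews at roughly 3 seconds per frame). The function names are made up for illustration, and “just over 8 hours” is rounded down to 8.

```python
def seconds_per_frame(total_hours: float, frame_count: int) -> float:
    """Average render time per frame, in seconds."""
    return total_hours * 3600 / frame_count


def total_minutes(seconds_each: float, frame_count: int) -> float:
    """Total time for a sequence, in minutes, at a given per-frame cost."""
    return seconds_each * frame_count / 60


FRAMES = 400  # length of the animation test

# Overnight final-quality render: about 8 hours for the whole sequence.
final_cost = seconds_per_frame(8, FRAMES)

# Low-sample preview: about 3 seconds per frame.
preview_total = total_minutes(3, FRAMES)

print(f"Final render: ~{final_cost:.0f} s per frame")
print(f"Preview pass: ~{preview_total:.0f} min for all {FRAMES} frames")
```

So the full-quality pass works out to a little over a minute per frame, while a low-sample preview of the whole shot takes about as long as a coffee break.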

For those interested in sharing their 360 renders on Facebook, there are a couple of tricks to getting Facebook to understand that an image is a 360 photo. One is to make sure the image has a 2:1 aspect ratio; the other is to embed camera metadata in the image file that makes Facebook think it’s a photo taken by a 360 camera. For that I used ExifTool. Once you’ve got this working, anyone on Facebook can look around the image on their smartphone, with the phone acting as a virtual window into it. The Facebook app on Android also directly supports the Gear VR: a ‘view in VR’ option appears on the 360 image, and when you tap it you’re asked to insert the phone into the Gear VR, and the image is right there to look around in. Very cool. I’ve yet to see a Facebook friend try this option, but with Gear VR sales over a million by now, I believe it’s only a matter of time.
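As a rough sketch of those two tricks, the Python below checks the 2:1 aspect ratio and builds an ExifTool command line that writes camera make and model tags. The specific values (`RICOH` / `RICOH THETA S`) are an assumption on my part, chosen as an example of a 360 camera and not necessarily the values any particular workflow needs. The file name and function names are hypothetical too. The script only prints the command; it doesn’t run ExifTool.

```python
import shlex


def is_two_to_one(width: int, height: int) -> bool:
    """True if the image is exactly twice as wide as it is tall."""
    return width == 2 * height


def exiftool_360_command(image_path: str,
                         make: str = "RICOH",
                         model: str = "RICOH THETA S") -> list:
    """Build (but do not run) an ExifTool call that sets camera tags.

    The default make/model are an assumed example of a 360 camera.
    """
    return ["exiftool", f"-Make={make}", f"-Model={model}", image_path]


# Hypothetical render size and file name, for illustration only.
width, height = 2880, 1440
if is_two_to_one(width, height):
    cmd = exiftool_360_command("render_360.jpg")
    # shlex.join quotes arguments containing spaces for pasting into a shell.
    print(shlex.join(cmd))
else:
    print("Image is not 2:1; resize or re-render before tagging.")
```

Keeping the command as a list means it could be handed to `subprocess.run` later without worrying about quoting the space in the model name.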

So now I’m happy that I have a practical one-man pipeline set up for animation testing. Currently I’m working on the look of the environment and populating it with interesting things to keep the viewer looking around in this virtual world.